The initial idea was simple: develop a mail box system for home with which I could get CallerID notification, play a greeting message and have callers leave messages on my home telephone. This article covers a very simple way to play and record sound to and from a voice modem wave device.

Sound is recorded digitally by sampling the audio signal (waveform) many times per second; common rates are 8000, 11025, 22050, 44100, or 48000 samples per second. These are described as sampling rates of 8, 11.025, 22.05, 44.1, or 48 kilohertz (kHz). The higher the sampling rate, the more accurately the sound is defined, and the better the quality. Quality is also affected by the resolution of the digitisation process, usually described as 8-bit or 16-bit. An 8-bit number can have one of 256 values (2^8), while a 16-bit number has one of 65536 values (2^16). Clearly more bits give more accuracy, and inherently a greater dynamic range, that is, the difference between the loudest and quietest sounds which can be coded. The dynamic range of the coding doubles (or is said to increase by about 6 decibels (dB)) for each additional bit. In practice, particularly with cheap sound cards, there is commonly some kind of background noise which masks the quieter sounds and reduces the useful dynamic range.
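The "6 dB per bit" rule can be checked with a few lines of Pascal. This is only a sketch of the theoretical figure for linear PCM, 20 * log10(2^bits); the function name DynamicRangeDb is mine and is not part of the component.

```pascal
program DynamicRangeSketch;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  Math;

// Theoretical dynamic range of linear PCM, in dB: 20 * log10(2^Bits),
// which works out to roughly 6.02 dB per bit.
function DynamicRangeDb(Bits: Integer): Double;
begin
  Result := Bits * 20.0 * Log10(2.0);
end;

begin
  WriteLn('8-bit:  ', 1 shl 8, ' levels, ', DynamicRangeDb(8):0:1, ' dB');
  WriteLn('16-bit: ', 1 shl 16, ' levels, ', DynamicRangeDb(16):0:1, ' dB');
end.
```

The results, about 48 dB for 8 bits and about 96 dB for 16 bits, are the usual rounded figures quoted for these resolutions.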

Table I below shows the sampling rates, resolution, dynamic range and the number of channels required by various types of applications.

| Application | Sampling rate | Resolution | Dynamic range | Channels |
|---|---|---|---|---|
| Telephone quality speech | 8 kHz | 8/16-bit | 48 dB | Mono |
| CD quality music | 44.1 kHz | 16-bit | 96 dB | Stereo |

To meet the requirements of the MailBox project, I only need telephone quality speech: an 8 kHz, 16-bit mono stream that can be played and recorded on my voice modem (a US Robotics 56K Voice PCI modem).
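To give an idea of what this format costs in storage, here is a small sketch that derives the data rate of uncompressed PCM from the sampling rate, the resolution and the number of channels. The function names are hypothetical helpers of mine, not part of the component.

```pascal
program PcmDataRate;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// Bytes per sample frame (one sample for every channel) of uncompressed PCM.
function BlockAlign(Channels, BitsPerSample: Integer): Integer;
begin
  Result := Channels * (BitsPerSample div 8);
end;

// Bytes needed to store one second of uncompressed PCM.
function BytesPerSecond(SampleRate, Channels, BitsPerSample: Integer): Integer;
begin
  Result := SampleRate * BlockAlign(Channels, BitsPerSample);
end;

begin
  // Telephone quality as used in this project: 8 kHz, 16-bit, mono.
  WriteLn('Telephone: ', BytesPerSecond(8000, 1, 16), ' bytes/s');
  // CD quality from Table I: 44.1 kHz, 16-bit, stereo.
  WriteLn('CD:        ', BytesPerSecond(44100, 2, 16), ' bytes/s');
  WriteLn('One minute of telephone quality speech: ',
    60 * BytesPerSecond(8000, 1, 16), ' bytes');
end.
```

At 16000 bytes per second, a one-minute message takes a little under one megabyte, which is why the .wav format is said to use a lot of disk space.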

Windows supports three distinct types of multimedia data: MIDI, waveform audio, and video. Considering the requirements of the project, the scope was restricted to waveform audio and, in particular, the .wav file format. In fact, in the Microsoft Windows operating system, most waveform-audio files use the .WAV filename extension. This format is the easiest to use, though it takes a lot of disk space. For voice modem applications, there seems to be no alternative [My unofficial TAPI FAQ by Bruce Pennypacker].

I will use a subset of the Windows Multimedia API set, the low-level multimedia (wave) API, in order to connect to the sound devices (sound card or voice modem). It includes the symmetrical set of waveOut and waveIn functions described in detail in the Windows Multimedia API Reference help file. In addition, I will use the .wav file format and its family of mmio functions to read and write the files. All these functions are declared in mmsystem.pas.

This type of waveform audio file is the most common audio file format on Windows PCs and the simplest way to store sounds on a computer. Such files are in Resource Interchange File Format (RIFF), which stores headers and data in variable-length "chunks" that always start with a four-letter code such as "WAVE" or "data". The "data" chunk usually holds PCM (Pulse Code Modulation) samples. A waveform audio file almost never compresses its PCM data; this makes the files large, but easy and quick to read.

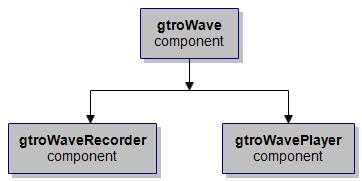

The base component

As shown in Figure 1, all the common procedures, variables and properties are contained in the parent component gtroWave, which is derived from TComponent, whereas those specific to playing or recording are contained in the child components gtroWavePlayer and gtroWaveRecorder. These two are the only ones that are registered in the component palette.


The code that follows gives a skeleton representation of the declaration of the gtroWave component.

TGtroWave = class(TComponent)

private

...

protected

...

procedure GetWaveOutDevices(var List: TStringList);

procedure GetWaveInDevices(var List: TStringList);

property FileName: string read fFileName write fFileName;

property OnDoneEvent: TNotifyEvent read fDoneEvent write fDoneEvent;

property OnAddBufferEvent: TAddBufferEvent

read fOnAddBufferEvent write fOnAddBufferEvent;

property OnStartRecording: TNotifyEvent

read fOnStartRecording write fOnStartRecording;

property OnWaveFormatEx: TWaveFormatExEvent

read FWaveFormatExEvent write FWaveFormatExEvent;

property OnWaveOutClose: TNotifyEvent

read FOnWaveOutClose write FOnWaveOutClose;

public

...

published

...

end;

The only properties left in this listing are protected properties, which are made public or published in the child components.

Important note

For the sake of clarity, the code displayed in the following sections has been stripped of all exception handling and debugging statements.

The gtroWavePlayer component

The TGtroWavePlayer class inherits from the TGtroWave class and contains the procedures, variables and properties needed to read and play the .wav file referred to by FileName. It works as follows.

Reading the .wav file

This component has been designed to play .wav files either through the sound card or through the modem. Before playback starts, the ReadWavFile() function opens the file, reads its waveform data into memory and closes it.

function TGtroWavePlayer.ReadWavFile(FileName: string): Boolean; // simplified code

begin

mmioHandle := mmioOpen(PChar(FileName), nil, MMIO_READ or MMIO_ALLOCBUF);

RiffCkInfo.ckId := mmioStringToFOURCC('RIFF', 0); // 'RIFF' chunk id

RiffCkInfo.fccType := mmioStringToFOURCC('WAVE', 0);

mmioDescend(mmioHandle, @RiffCkInfo, nil, MMIO_FINDRIFF); // descending into the 'RIFF' chunk

FmtCkInfo.ckId := mmioStringToFOURCC('fmt ', 0); // 'fmt ' chunk id

mmioDescend(mmioHandle, @FmtCkInfo, @RiffCkInfo, MMIO_FINDCHUNK);

mmioRead(mmioHandle, PChar(@WaveFormatEx), SizeOf(TWaveFormatEx));

mmioAscend(mmioHandle, @FmtCkInfo, 0); // ascend one chunk up

FmtCkInfo.ckid := mmioStringToFourCC('data', 0); // initialize 'data' chunk info structure

mmioDescend(mmioHandle, @FmtCkInfo, @RiffCkInfo, MMIO_FINDCHUNK);

dwDataSize:= FmtCkInfo.cksize; // stores the data size

GetMem(fData, dwDataSize); // Allocate memory for the wave data

mmioRead(mmioHandle, fData, dwDataSize);

GetMem(pWaveHeader, sizeof(WAVEHDR)); // pWaveHeader: Address of buffer to contain the data

with pWaveHeader^ do

begin

lpData := fData; // address of the waveform buffer

dwBufferLength := dwDataSize; // length, in bytes, of the buffer

dwFlags := 0; // Flags supplying information about the buffer

dwLoops := 0; // Number of times to play the loop

dwUser := 0; // 32 bits of user data

end;

mmioClose(mmioHandle, 0);

Result := True; // error handling has been stripped, so success is assumed here

end;

The function mmioOpen() opens the file that will be played to the sound device, and the opening is followed by a sequence of calls to mmioDescend() and mmioAscend() to read the various chunks of the file. Note that the data size, read from the cksize member of the FmtCkInfo structure, is used to allocate memory for the waveform data buffer (I use only one buffer due to the short length of the file) and that this buffer is pointed to by the lpData member of the WaveHeader structure. Once the data has been read into this buffer, the file is closed by the function mmioClose().

Playback

Playback is initiated in the Start() procedure, which uses the Wave API functions waveOutOpen(), waveOutPrepareHeader() and waveOutWrite() after having opened, read and closed the file to be played back.

procedure TGtroWavePlayer.Start(const FileName: string; DeviceID: UINT); // simplified code

begin

WomDoneThread:= TWomDone.Create(True); // Creates suspended

WomDoneThread.FreeOnTerminate:= True;

if ReadWavFile(FileName) then

begin

waveOutOpen(@WaveOutHandle, DeviceID, @WaveFormatEx, DWORD(@WaveOutPrc),

Integer(Self), CALLBACK_FUNCTION or WAVE_MAPPED);

waveOutPrepareHeader(WaveOutHandle, pWaveHeader, sizeof(WAVEHDR));

waveOutWrite(WaveOutHandle, pWaveHeader, sizeof(WAVEHDR))

end; // if ReadWavFile

end;

The function ReadWavFile() is executed first. Upon success, waveOutOpen() opens the sound device and retrieves a handle to the open device, which is used by all other functions dealing with the device. It requires six arguments: the address where the function will store the handle of the device, the DeviceID, the address of a WaveFormatEx structure, the address of a fixed callback function, user-instance data passed to the callback mechanism and a set of flags defining how the device is opened.

The waveOutPrepareHeader() method is then called: it prepares a waveform-audio data block for playback (I don't know what the function does exactly but it needs to be done). Finally, waveOutWrite() is called and sends the data block to the waveform-audio output device.

The Callback function

Note that in the process described up to now, the sound device has not been closed. As a matter of fact, waveOutWrite() is not a synchronous function. It returns control to the next executable statement before its job is finished and, meanwhile, playback runs in a separate thread. At this point, the calling application becomes message-driven (as Windows is). The messages issued by the thread are the following:

- WOM_OPEN - when the device was opened;

- WOM_DONE - when the device is through with the buffer;

- WOM_CLOSE - when the device was closed

As usual in Windows, these messages are sent to a callback function, the address of which has been passed to the device as an argument of waveOutOpen().

procedure waveOutPrc(hwo : HWAVEOUT; uMsg : UINT; dwInstance,

dwParam1, dwParam2 : DWORD); stdcall;

begin

CurrInstance:= dwInstance;

TGtroWavePlayer(dwInstance).WaveOutProc(hwo, uMsg, dwParam1, dwParam2);

end;

As shown above, the callback function is a global procedure, not a method of the TGtroWavePlayer class (Windows is not object-oriented and does not know what to do with the Self parameter that accompanies methods). As a result, the only action undertaken by this callback function is to transfer control to the WaveOutProc() method, which handles the messages through a case statement as follows:

procedure TGtroWavePlayer.WaveOutProc(hwo: HWAVEOUT; uMsg: UINT; dwParam1,

dwParam2: DWORD);

begin

case uMsg of

WOM_OPEN: begin end;

WOM_CLOSE: TriggerOnDoneEvent; // WaveOut device is closed

WOM_DONE: WomDoneThread.Resume; // start the thread which closes the device.

end; // case

end;

As for what can be executed in the callback function, the Multimedia Reference help file is clear: "Applications should not call any system-defined functions from inside a callback function" since such action can hang the process.

As a result of this, a thread called WomDoneThread has been used. It is created "suspended" at the beginning of the Start() method with its property FreeOnTerminate set to "true" so that it destroys itself once terminated. When the WOM_DONE message is received by the callback function, the thread is resumed.
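The forwarding from a global callback procedure to a method of the component, described earlier in this section, can be illustrated without any multimedia device. The sketch below is not the component's code: TPlainCallback, TPlayer, FakeDevice and MSG_DONE are hypothetical stand-ins, and the instance travels as a Pointer rather than as the DWORD used in the listings above (a DWORD only holds a pointer on 32-bit Windows).

```pascal
program CallbackTrampoline;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

const
  MSG_DONE = 1;  // hypothetical message code, standing in for WOM_DONE

type
  // Hypothetical callback type: a plain procedure, as a C-style API expects.
  TPlainCallback = procedure(Msg: Cardinal; Instance: Pointer);

  TPlayer = class
  public
    LastMsg: Cardinal;
    procedure HandleMessage(Msg: Cardinal);
  end;

procedure TPlayer.HandleMessage(Msg: Cardinal);
begin
  LastMsg := Msg;  // a real component would dispatch on Msg here
end;

// Global procedure with no hidden Self parameter. The instance travels
// as user data and is cast back to the object before forwarding.
procedure Trampoline(Msg: Cardinal; Instance: Pointer);
begin
  TPlayer(Instance).HandleMessage(Msg);
end;

// Hypothetical stand-in for the device: it only knows the callback
// address and the opaque user data it was given.
procedure FakeDevice(Callback: TPlainCallback; Instance: Pointer);
begin
  Callback(MSG_DONE, Instance);
end;

// Wires everything together and returns the message seen by the object.
function DemoForwardedMessage: Cardinal;
var
  Player: TPlayer;
begin
  Player := TPlayer.Create;
  try
    FakeDevice(@Trampoline, Pointer(Player));
    Result := Player.LastMsg;
  finally
    Player.Free;
  end;
end;

begin
  WriteLn('Message received by the object: ', DemoForwardedMessage);
end.
```

Passing Self as user data is what lets several instances of the component share the same global callback procedure.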

The WomDone thread

What does this thread do? Like all threads, when it is resumed, it executes its Execute() method. In this case, it calls Synchronize(). Why does it call this method? Simple! The properties and methods of objects from the VCL hierarchy are not guaranteed to be thread-safe. If all objects access their properties and execute their methods within a single thread, there is no need to worry about objects interfering with each other. To have work done in the main thread, the required actions must be placed in a separate routine (here StopIt), and this routine must be called through the thread's Synchronize() method. This is thread-safe, and it is exactly what the code presented hereunder does:

procedure TWomDone.Execute;

begin

inherited;

Synchronize(StopIt);

end;

Synchronize() is a method of TThread which takes as a parameter another parameterless method to be executed. The code in that method is then guaranteed to run as a result of the call to Synchronize() without conflicting with the VCL thread. Here, the method passed to Synchronize() is StopIt():

procedure TWomDone.StopIt;

begin

TGtroWavePlayer(CurrInstance).Stop;

end;

In this way, the Stop() method of the TGtroWavePlayer class is called.

However, this call to Synchronize() does not work all the time. When the component was executed in a DLL (or an in-process server) that was not window-based, Synchronize() hung and never returned each time it was called. This is because Synchronize() waits for the main thread to enter its message loop before executing the method passed to it as an argument. No window means no message loop, and therefore no way for Synchronize() to have that method executed. My answer to this was to call Stop() directly in the Execute() method of the thread.

procedure TWomDone.Execute;

begin

inherited;

TGtroWavePlayer(CurrInstance).Stop;

end;

Stopping the playback

The Stop() method terminates the playback of the wave file on the device.

procedure TGtroWavePlayer.Stop;

begin

WaveOutReset(WaveOutHandle);

WaveOutUnprepareHeader(WaveOutHandle, pWaveHeader, sizeof(WAVEHDR)); // unprepare header

if fData <> nil then

begin

FreeMem(fData);

fData:= nil;

end;

if pWaveHeader <> nil then

begin

FreeMem(pWaveHeader);

pWaveHeader:= nil;

end;

WaveOutClose(WaveOutHandle); // Close the device

if Assigned(FOnWaveOutClose) then FOnWaveOutClose(Self);

end;

First, it resets the playback with waveOutReset() and unprepares the header with waveOutUnprepareHeader(); it then frees the memory it allocated and finally closes the device with waveOutClose().

Interrupting the playback

All the above discussion has dealt with the case when the buffer/data block has been exhausted and the playback has terminated. However, the TGtroWavePlayer class provides a public method to interrupt the playback before it is terminated. The code of this method simply resumes the WomDoneThread, as the callback function does when the WOM_DONE message is received.

procedure TGtroWavePlayer.Interrupt;

begin

WomDoneThread.Resume;

end;

The gtroWaveRecorder component

As was the case for the TGtroWavePlayer class, the TGtroWaveRecorder class inherits from the TGtroWave class and contains the procedures, variables and properties needed to record and save the waveform to the .wav file referred to by FileName. It works as follows.

It could be expected that recording should be the reverse of playback. It is nearly so except for some details.

Starting the recording

Recording is initiated either manually or programmatically by the public method Start(). It requires two arguments: the name of the file to fill and the identification of the device to get the data from (sound card, modem).

procedure TGtroWaveRecorder.Start(FileName: string; DeviceID: UINT);

begin

...// Define the format of recording

DefineWaveFormat; // define the wave format and allocates memory

WaveInOpen(@WaveInHandle, // handle identifying the open waveform-audio input device.

DeviceID, // Identifier of the waveform-audio input device to open

pFormat, // Address of a WAVEFORMATEX structure that identifies the desired format

DWORD(@waveInPrc), // Address of a fixed callback function

Integer(Self), // User-instance data passed to the callback mechanism

CALLBACK_FUNCTION); // The dwCallback parameter is a callback procedure address

InitBuffers(pFormat);

// Prepare to write to file

RiffId := mmioStringToFOURCC('RIFF', 0); //initialize the chunk id values

WaveId := mmioStringToFOURCC('WAVE', 0);

FmtId := mmioStringToFOURCC('fmt ', 0);

FactID:= mmioStringToFOURCC('fact', 0);

DataId := mmioStringToFOURCC('data', 0);

// initialise chunk info structures

FillChar(RiffChunkInfo, SizeOf(TMMCkInfo), #0);

FillChar(SubChunkInfo , SizeOf(TMMCkInfo), #0);

mmioHandle:= mmioOpen(PChar(FileName), nil, MMIO_CREATE or MMIO_READWRITE or MMIO_ALLOCBUF); // Open file

RiffChunkInfo.ckId := RiffId; // add 'RIFF' chunk and 'WAVE' identifier

RiffChunkInfo.fccType := WaveId;

mmioCreateChunk(mmioHandle, @RiffChunkInfo, MMIO_CREATERIFF);

SubChunkInfo.ckId := FmtId; // add and write 'fmt' chunk

// creates a chunk in a RIFF file that was opened by using the mmioOpen function

mmioCreateChunk(mmioHandle, @SubChunkInfo, 0);

// the current file position is the beginning of the data portion of the new chunk

// writes a specified number of bytes to a file opened by using the mmioOpen function

mmioWrite(mmioHandle, PChar(pFormat), SizeOf(TWaveFormatEx));

// Current file position is incremented by the number of bytes written

mmioAscend(mmioHandle, @SubChunkInfo, 0);

SubChunkInfo.ckId := FactID;

mmioCreateChunk(mmioHandle, @SubChunkInfo, 0);

mmioWrite(mmioHandle, PChar(pFormat), SizeOf(TWaveFormatEx));

mmioAscend(mmioHandle, @SubChunkInfo, 0);

SubChunkInfo.ckId := DataId; // add and write 'data' chunk

mmioCreateChunk(mmioHandle, @SubChunkInfo, 0);

// wave file is now positioned ready to receive wave data bytes

WaveInStart(WaveInHandle); // starts input from the waveform-audio input device.

FreeMem(pFormat);

end;

First, the format of the sound must be defined. For use on the modem, I have selected 8 kHz, 16-bit PCM mono, as shown in the DefineWaveFormat() method listed hereunder.

procedure TGtroWaveRecorder.DefineWaveFormat;

begin

pFormat := AllocMem(SizeOf(TWaveFormatEx));

with pFormat^ do // these relationships hold only for WAVE_FORMAT_PCM formats

begin

wFormatTag := WAVE_FORMAT_PCM;

nChannels := 1;

nSamplesPerSec := 8000;

wBitsPerSample := 16;

nBlockAlign := 2;

nAvgBytesPerSec := 16000;

cbSize := 0;

end;

end;

waveInOpen() is then called to open the device. InitBuffers() follows.

procedure TGtroWaveRecorder.InitBuffers(pFormat: pWaveFormatEx);

var // Prepares for buffers and headers

i: Integer;

begin

with pFormat^ do

BufferSize:= Round((BufferTimeLength * nAvgBytesPerSec)/nBlockAlign) * nBlockAlign;

for i:= 0 to BUFFERCOUNT - 1 do

begin

Buffers[i]:= AllocMem(BufferSize);

Headers[i]:= AllocMem(SizeOf(TWaveHdr));

// Initialize headers

Headers[i]^.lpData:= Buffers[i];

Headers[i]^.dwBufferLength:= BufferSize;

Headers[i]^.dwUser:= i;

// prepares a buffer for waveform-audio input.

WaveInPrepareHeader(WaveInHandle, Headers[i], SizeOf(TWaveHdr));

// add all buffer to waveIn buffer queue

WaveInAddBuffer(WaveInHandle, Headers[i], SizeOf(TWaveHdr));

end; // for i:= 0 to BUFFERCOUNT - 1

end;

Remember that playback used only one buffer of fixed length (the length of the data in the file to play back was known before playback). For recording, at least two buffers must be used, as the length of the recording is not known ahead of time and the buffers are streamed to the device one by one. In this component, I have used three buffers (the value of the constant BUFFERCOUNT).

InitBuffers() defines the length of each buffer (here BufferTimeLength is set to 0.3 s) and allocates memory for the buffers and the headers. waveInPrepareHeader() is then called for each header, and waveInAddBuffer() sends the input buffers to the waveform-audio input device. When a buffer is filled, the component is notified.
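As a worked example of the buffer size formula used in InitBuffers(), the sketch below applies it to the format selected in DefineWaveFormat(). The function name AlignedBufferSize is a hypothetical helper of mine; the component computes the value inline.

```pascal
program BufferSizeSketch;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// Size in bytes of a buffer holding Seconds of audio, rounded to a whole
// number of sample frames, following the formula used in InitBuffers().
function AlignedBufferSize(Seconds: Double; AvgBytesPerSec, BlockAlign: Integer): Integer;
begin
  Result := Integer(Round((Seconds * AvgBytesPerSec) / BlockAlign)) * BlockAlign;
end;

begin
  // 0.3 s at 8 kHz, 16-bit mono (16000 bytes/s, 2 bytes per frame)
  WriteLn(AlignedBufferSize(0.3, 16000, 2), ' bytes per buffer');
end.
```

Each buffer therefore holds 4800 bytes, that is 2400 samples, and the three buffers together cover 0.9 s of audio.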

The remainder of the Start() method prepares the target file, writing its RIFF structure with a sequence of mmio functions. Once this is done, waveInStart() initiates input from the waveform-audio input device.

Stopping the recording

Stop() is a public method of the class which is called by the application that uses the component. Its code follows.

procedure TGtroWaveRecorder.Stop;

begin

WaveInReset(WaveInHandle);

WaveInStop(WaveInHandle);

Cleanup;

mmioAscend(mmioHandle, @SubChunkInfo, 0); // write correct value for 'data' chunk length

mmioAscend(mmioHandle, @RiffChunkInfo, 0); // write correct value for 'RIFF' chunk length

mmioClose(mmioHandle, 0); // close the wave file

WaveInClose(WaveInHandle);

end;

With waveInReset(), input on the waveform-audio input device is stopped and the current position is reset to zero, whereas waveInStop() simply stops waveform-audio input. Cleanup() unprepares the headers and frees the buffers, and the mmioAscend() calls that follow set the ckSize member of the TMMCKInfo structures SubChunkInfo and RiffChunkInfo to the final lengths of the data and RIFF chunks. Finally, the file is closed with mmioClose() and the device is closed with waveInClose().

procedure TGtroWaveRecorder.Cleanup;

var

i: Integer;

begin

for i:= 0 to BUFFERCOUNT - 1 do

begin

WaveInUnprepareHeader(WaveInHandle, Headers[i], SizeOf(TWaveHdr)); // error check stripped

FreeMem(Buffers[i]);

FreeMem(Headers[i]);

end; // for i:= 0 to BUFFERCOUNT - 1

end;

In the listing above, the constant BUFFERCOUNT is equal to three.
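The size fix-up that the two mmioAscend() calls perform in Stop() can be pictured with an ordinary stream: a chunk is started with a placeholder size, data is appended, and the size field is patched once the final length is known. The sketch below only imitates that behaviour with a TMemoryStream; BeginChunk, EndChunk and the other names are hypothetical, the 'fmt ' chunk holds dummy bytes, and a little-endian CPU is assumed.

```pascal
program RiffSizePatch;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  Classes;

procedure WriteTag(S: TStream; const Tag: AnsiString);
begin
  S.WriteBuffer(PAnsiChar(Tag)^, 4);  // Tag must be exactly four characters
end;

// Starts a chunk: writes its id and a placeholder size, and returns the
// position of the size field so that it can be patched later.
function BeginChunk(S: TStream; const Id: AnsiString): Int64;
var
  Placeholder: Cardinal;
begin
  WriteTag(S, Id);
  Result := S.Position;
  Placeholder := 0;
  S.WriteBuffer(Placeholder, SizeOf(Placeholder));
end;

// Ends a chunk: goes back and stores the number of bytes written since
// BeginChunk(). Assumes a little-endian CPU and an even chunk length.
procedure EndChunk(S: TStream; SizePos: Int64);
var
  EndPos: Int64;
  Size: Cardinal;
begin
  EndPos := S.Position;
  Size := Cardinal(EndPos - SizePos - SizeOf(Size));
  S.Position := SizePos;
  S.WriteBuffer(Size, SizeOf(Size));
  S.Position := EndPos;
end;

function ReadSizeAt(S: TStream; SizePos: Int64): Cardinal;
begin
  S.Position := SizePos;
  S.ReadBuffer(Result, SizeOf(Result));
end;

// Builds a RIFF/WAVE skeleton around DataBytes bytes of silence and
// returns the patched size of the RIFF chunk.
function DemoRiffSize(DataBytes: Integer): Cardinal;
var
  S: TMemoryStream;
  RiffPos, FmtPos, DataPos: Int64;
  Fmt: array[0..15] of Byte;  // dummy 16-byte format block
  Zero: Byte;
  i: Integer;
begin
  FillChar(Fmt, SizeOf(Fmt), 0);
  Zero := 0;
  S := TMemoryStream.Create;
  try
    RiffPos := BeginChunk(S, 'RIFF');
    WriteTag(S, 'WAVE');
    FmtPos := BeginChunk(S, 'fmt ');
    S.WriteBuffer(Fmt, SizeOf(Fmt));
    EndChunk(S, FmtPos);
    DataPos := BeginChunk(S, 'data');
    for i := 1 to DataBytes do
      S.WriteBuffer(Zero, 1);  // in a recorder, the length is only known at the end
    EndChunk(S, DataPos);      // patch the 'data' size first
    EndChunk(S, RiffPos);      // then the enclosing 'RIFF' size
    Result := ReadSizeAt(S, RiffPos);
  finally
    S.Free;
  end;
end;

begin
  // 4 ('WAVE') + 8 + 16 ('fmt ') + 8 + 200 ('data') = 236
  WriteLn('RIFF chunk size: ', DemoRiffSize(200));
end.
```

As in Stop(), the inner chunk is closed before the outer one, so that the RIFF size includes the final length of the data chunk.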

Callback function

During recording, the component is notified of three events:

- WIM_OPEN - when the waveform-audio input device is opened;

- WIM_DATA - when waveform-audio data is present in the input buffer and the buffer is being returned to the application. The message can be sent when the buffer is full or after the waveInReset() function is called;

- WIM_CLOSE - when the waveform-audio input device is closed. The device handle is no longer valid after this message has been sent.

procedure waveInPrc(hwo : HWAVEIN; uMsg : UINT; dwInstance,

dwParam1, dwParam2 : DWORD); stdcall;

begin

TGtroWaveRecorder(dwInstance).WaveInProc(hwo, uMsg, dwParam1, dwParam2);

end;

This global callback returns control to the WaveInProc() method of the component for proper handling.

procedure TGtroWaveRecorder.WaveInProc(WaveInHandle: HWAVEIN; uMsg: UINT; dwParam1,

dwParam2: DWORD);

var

WaveHdr: TWaveHdr;

WriteBytes: Integer;

Level: Integer;

begin

case uMsg of

WIM_OPEN: // device is opened using the waveInOpen function.

TriggerOnStartRecording;

WIM_DATA: // device is finished with a data block sent using the waveInAddBuffer function.

begin

WaveHdr:= pWaveHdr(dwParam1)^; // dwParam1 holds the address of the WAVEHDR of the returned buffer

if WaveHdr.dwBytesRecorded > 0 then

begin

//write returned buffer to wave file

WriteBytes := mmioWrite(mmioHandle, WaveHdr.lpData, WaveHdr.dwBytesRecorded); // only the bytes actually recorded

// count bytes for 'fact' chunk data calculation

Inc(BytesWritten, WriteBytes);

// add the used buffer back to the wavein queue

if FillingInBuffers and (WaveInAddBuffer(WaveInHandle, pWaveHdr(dwParam1), SizeOf(TWaveHdr))

<> MMSYSERR_NOERROR) then // requeue only while recording is in progress

raise Exception.Create('Unable to add Buffer');

Level:= RecorderDetectSilence(WaveHdr.lpData, WriteBytes);

TriggerAddBufferEvent(dwParam1, BytesWritten, Level);

Application.ProcessMessages;

end; {if WaveHdr.dwBytesRecorded > 0}

end; // WIM_DATA

WIM_CLOSE: // device is closed using the waveInClose function.

TriggerOnDoneEvent;

end; // Case

end;

For the WIM_OPEN and WIM_CLOSE messages, the component simply informs the calling application of the event, and the calling application can then handle it.

The WIM_DATA message is handled within the component. When a data buffer has been received, it is written into the target file by the mmioWrite() function, and the number of bytes written is added to a member variable. Depending on the value of the boolean member variable FillingInBuffers (set to true in Start() and to false in Stop()), the buffer is queued back to the device using waveInAddBuffer(). Finally, the local variable Level is set by the RecorderDetectSilence() method, which is discussed in the next section.

Detecting silence

The following method is called from the callback each time the WIM_DATA message is received, that is, each time the sound device is finished with a data block that was queued with waveInAddBuffer().

function TGtroWaveRecorder.RecorderDetectSilence(lpData: PChar; Count: Integer): Integer;

var // lpData is a pChar of length Count

i, Level, NCount: Integer;

p: pSmallIntArray;

begin

p:= pSmallIntArray(lpData); // Convert PChar into an array of SmallInt

NCount:= Count div 2;

Level:= 0;

for i:= 0 to NCount - 1 do

Level:= Level + Abs(p^[i]);

Result:= Level div NCount;

end;

The data block is passed as a PChar, that is, a pointer to 8-bit data, while each sample is 16 bits long. Therefore, this function takes the PChar pointer to the buffer and the length of the buffer (in bytes) and typecasts the pointer so that it points to an array of SmallInt, each element corresponding to one 16-bit sample of the waveform. The pSmallIntArray type is defined as follows:

type

pSmallIntArray = ^TSmallIntArray;

TSmallIntArray = array[0..16383] of SmallInt;

Then this array is read, the sum of the absolute value of each sample is computed and divided by the number of samples. Such an average is proportional to the level of signal. This value is returned to the calling application through the OnAddBufferEvent event and can be used to detect silence on the line. The determination of the threshold and the number of data blocks that will determine silence is left to the calling application.
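One way a calling application might turn these levels into a decision is to count consecutive quiet blocks. The sketch below is only an illustration under assumed values: the threshold of 200 and the run of 10 blocks (about 3 s with 0.3 s buffers) are arbitrary, and TSilenceDetector, FeedLevel and AverageLevel are hypothetical names, not part of the component.

```pascal
program SilenceSketch;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

const
  SILENCE_THRESHOLD = 200;    // assumed level below which a block counts as quiet
  SILENT_BLOCKS_NEEDED = 10;  // assumed run length, about 3 s with 0.3 s buffers
  LoudBlock: array[0..3] of SmallInt = (1000, -1200, 900, -800);
  QuietBlock: array[0..3] of SmallInt = (3, -2, 1, -4);

type
  TSilenceDetector = record
    SilentBlocks: Integer;  // consecutive quiet blocks seen so far
  end;

// Average absolute amplitude of a block of 16-bit samples, computed the
// same way as in RecorderDetectSilence().
function AverageLevel(const Samples: array of SmallInt): Integer;
var
  i: Integer;
  Sum: Int64;
begin
  Result := 0;
  if Length(Samples) = 0 then
    Exit;
  Sum := 0;
  for i := Low(Samples) to High(Samples) do
    Sum := Sum + Abs(Integer(Samples[i]));
  Result := Integer(Sum div Length(Samples));
end;

// Feeds the level of one block. Returns True once enough consecutive
// quiet blocks have been seen; a loud block resets the count.
function FeedLevel(var Detector: TSilenceDetector; Level: Integer): Boolean;
begin
  if Level < SILENCE_THRESHOLD then
    Inc(Detector.SilentBlocks)
  else
    Detector.SilentBlocks := 0;
  Result := Detector.SilentBlocks >= SILENT_BLOCKS_NEEDED;
end;

// One loud block followed by a run of quiet blocks.
function DemoSilenceDetected: Boolean;
var
  Detector: TSilenceDetector;
  i: Integer;
begin
  Detector.SilentBlocks := 0;
  Result := FeedLevel(Detector, AverageLevel(LoudBlock));
  for i := 1 to SILENT_BLOCKS_NEEDED do
    Result := FeedLevel(Detector, AverageLevel(QuietBlock));
end;

begin
  if DemoSilenceDetected then
    WriteLn('Silence detected')
  else
    WriteLn('Still talking');
end.
```

In an application, FeedLevel() would be called from the OnAddBufferEvent handler with the level reported by the component, and a True result could be used to stop the recording.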

Conclusion

I needed to develop simple Play/Record .wav files components for a Mail Box project in development and this is the result. For me, it was an introduction to low-level sound programming. The component is minimal but it provides the capabilities which are required to play and record .wav files to and from a voice modem. Obviously, it will also play and record to and from any sound card.

The source code can be downloaded here. The archive file consists of two files: gtroWave.pas which contains the code of the components and gtroWave.dcr which contains the icons that will display in the component palette. Uncompress them in any folder, load them in a design package, compile and install. That's all you need to do. Good luck and have fun.

The next article shows how these components were integrated in the TGtroTAPI component.

Annexes

What about chunks?

The WAVE file format is a file format for storing digital audio (waveform) data. It supports a variety of bit resolutions, sample rates and numbers of audio channels. Technically speaking, a wave file is a collection of different types of chunks. A chunk has the following format (derived from mmsystem.pas):

TMMCKInfo = record

ckid: FOURCC; { chunk ID }

cksize: DWORD; { chunk size }

fccType: FOURCC; { form type or list type }

dwDataOffset: DWORD; { offset of data portion of chunk }

dwFlags: DWORD; { flags used by MMIO functions }

end;

The file must comprise the following three "chunks"; compressed formats require a fourth:

- the RIFF chunk that identifies the file as a RIFF/WAVE file and always begins the file.

- the Format chunk that contains important parameters describing the waveform such as its sample rate. The ID is always "fmt ".

- the Data chunk which contains the actual waveform data. Of course it starts with the four bytes "data", and is followed by a DWORD that describes the length of the data. After that all that is left is the actual PCM data.

- Compressed formats must have a Fact chunk which contains a DWORD indicating the size (in sample points) of the waveform after it has been decompressed. This chunk is required for all WAVE formats other than WAVE_FORMAT_PCM. It stores file dependent information about the contents of the WAVE data. It currently specifies the time length of the data in samples.
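The chunk layout listed above (a four-character id, a DWORD size, then the data) can be walked with a plain stream, without the mmio functions. The sketch below builds a tiny in-memory file with dummy 'fmt ' and 'data' chunks and lists them; all names are hypothetical, the chunk contents are zero bytes rather than real audio, and a little-endian CPU is assumed.

```pascal
program RiffWalk;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  Classes;

procedure WriteTag(S: TStream; const Tag: AnsiString);
begin
  S.WriteBuffer(PAnsiChar(Tag)^, 4);  // Tag must be exactly four characters
end;

// Writes a chunk header (4-character id + 32-bit size) followed by Size
// zero bytes of dummy data. Assumes a little-endian CPU.
procedure WriteDummyChunk(S: TStream; const Id: AnsiString; Size: Cardinal);
var
  Zero: Byte;
  i: Integer;
begin
  WriteTag(S, Id);
  S.WriteBuffer(Size, SizeOf(Size));
  Zero := 0;
  for i := 1 to Integer(Size) do
    S.WriteBuffer(Zero, 1);
end;

// Walks the sub-chunks of a RIFF stream, printing each id and size,
// and returns how many were found.
function ListChunks(S: TStream): Integer;
var
  Id: array[0..3] of AnsiChar;
  Size: Cardinal;
begin
  Result := 0;
  S.Position := 12;  // skip 'RIFF', the RIFF size and the 'WAVE' form type
  while S.Position + 8 <= S.Size do
  begin
    S.ReadBuffer(Id, SizeOf(Id));
    S.ReadBuffer(Size, SizeOf(Size));
    WriteLn('chunk "', Id[0], Id[1], Id[2], Id[3], '": ', Size, ' bytes');
    S.Position := S.Position + Size + (Size and 1);  // data is padded to an even length
    Inc(Result);
  end;
end;

// Builds a minimal RIFF/WAVE layout in memory and lists its chunks.
function DemoChunkCount: Integer;
var
  S: TMemoryStream;
  RiffSize: Cardinal;
begin
  S := TMemoryStream.Create;
  try
    RiffSize := 4 + (8 + 16) + (8 + 32);  // 'WAVE' + 'fmt ' chunk + 'data' chunk
    WriteTag(S, 'RIFF');
    S.WriteBuffer(RiffSize, SizeOf(RiffSize));
    WriteTag(S, 'WAVE');
    WriteDummyChunk(S, 'fmt ', 16);
    WriteDummyChunk(S, 'data', 32);
    Result := ListChunks(S);
  finally
    S.Free;
  end;
end;

begin
  WriteLn(DemoChunkCount, ' chunks found');
end.
```

The same ListChunks() function should work on a real file if the memory stream is replaced by a TFileStream opened for reading, although I have only sketched it against the in-memory layout shown here.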

US Robotics chipset

Modems based on the US Robotics chipset can play and record 8 kHz, 8-bit, mono PCM encoded WAV files. Some older models only support the IMA ADPCM format. With modems that support the 8 kHz, 8-bit, mono PCM format, no conversion is necessary, and the modem will play back and record this format directly.